I was a campus pastor at Michigan State University from 1981 to 1992. In our ministry we had students representing many academic disciplines. Nearly all the sciences were represented, along with the humanities, the arts, you name it.

Linda and I got to know these students. What a blessing that was. And, what a learning experience.

One of our students, James, was completing his PhD in computer science. His doctoral dissertation was on computer vision. I remember asking James to explain this to me. I confess to not understanding a lot of this. I also remember thinking of a connection between what James was doing, and what French philosopher Merleau-Ponty was doing in his book Phenomenology of Perception. I lent James my copy of the book. He told me it was helpful. He integrated some of Merleau-Ponty into his dissertation. (James, if you are reading, this is how I remember it.)

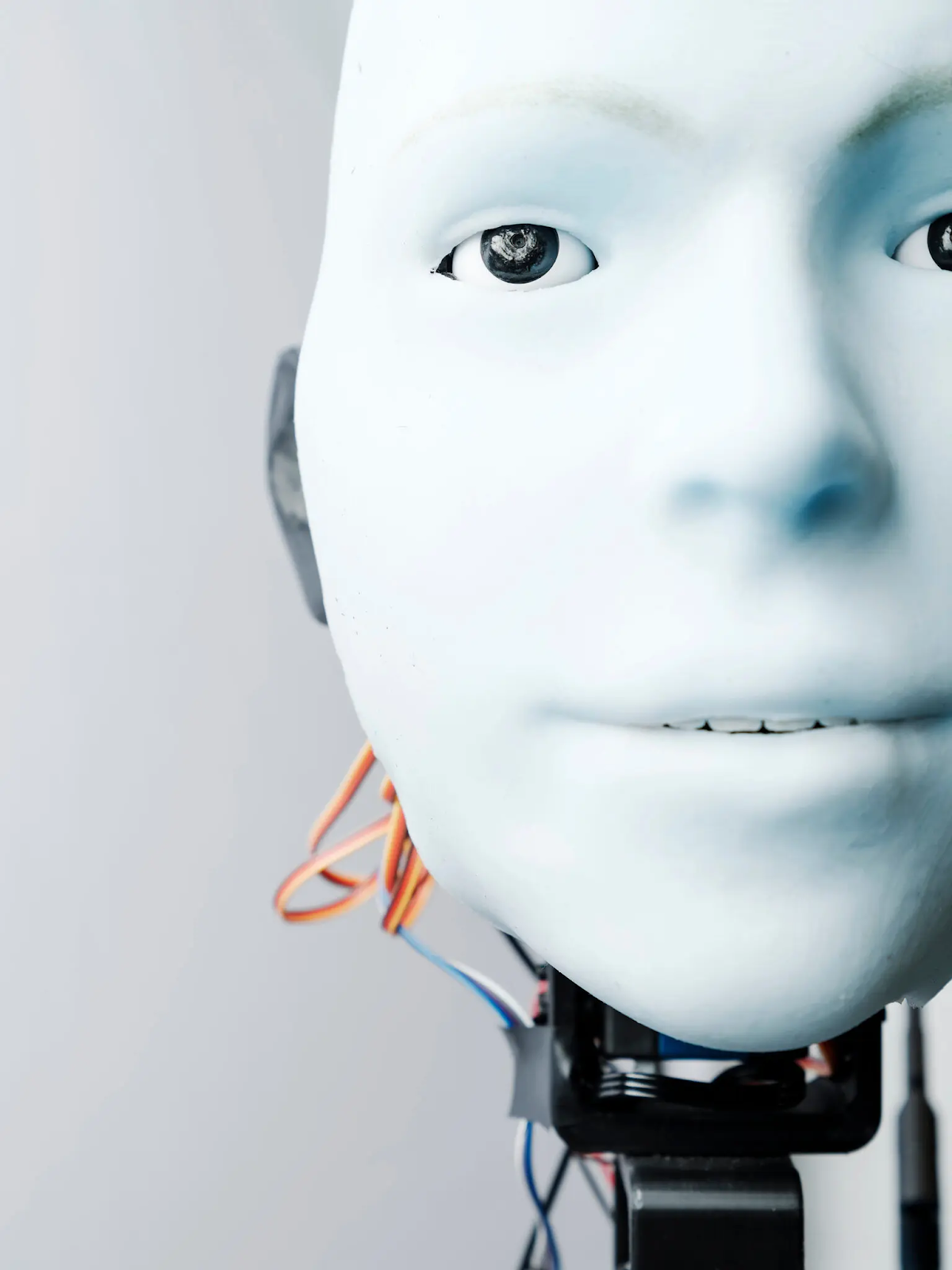

Merleau-Ponty's book concerns the nature and role of the human body, as an integrated system, in the act of perception. What, Merleau-Ponty asks, does it mean to see, to perceive? The analysis of this kind of meta-activity is common in the phenomenological stream indebted to Heidegger. Because perception necessarily involves not just the eyes and brain, but the human body on which eyes and brain depend, just what would this mean, if anything, as regards a computer's body when it comes to computer vision?

I considered James to be a brilliant young scholar. One rich part of our discussions was the question of whether or not a computer could be self-conscious. We both, as I remember it, thought the answer to this question was "no," in principle. I think of such things today, as I read the New York Times story "'Consciousness' in Robots Was Once Taboo. Now It's the Last Word."

The NYTimes article says, "The first difficulty with studying the c-word ['consciousness,' that is] is that there is no consensus around what it actually refers to."

Ahhh... That seems to be important. When James shared his project with me I was fascinated. I thought, in a dissertation on computer vision, James will first need to define and understand 'vision.'

"Trying to render the squishy c-word using tractable inputs and functions is a difficult, if not impossible, task. Most roboticists and engineers tend to skip the philosophy and form their own functional definitions. Thomas Sheridan, a professor emeritus of mechanical engineering at the Massachusetts Institute of Technology, said that he believed consciousness could be reduced to a certain process and that the more we find out about the brain, the less fuzzy the concept will seem. “What started out as being spooky and kind of religious ends up being sort of straightforward, objective science,” he said."

Dr. Hod Lipson, a mechanical engineer who directs the Creative Machines Lab at Columbia University, has settled on a practical criterion for consciousness: the ability to imagine yourself in the future.

I am an indeterminist when it comes to consciousness. That is, something is not the kind of consciousness humans have (self-consciousness) unless it is not fully reducible to antecedent causal conditions. A robot, or a computer, would need to be irreducible in this way to qualify for consciousness (again, the kind humans have).

The NYTimes article understands this. We read,

"...self-awareness seems important, but aren’t there other key features of consciousness? Can we call something conscious if it doesn’t feel conscious to us?

Dr. [Antonio] Chella believes that consciousness can’t exist without language, and has been developing robots that can form internal monologues, reasoning to themselves and reflecting on the things they see around them. One of his robots was recently able to recognize itself in a mirror, passing what is probably the most famous test of animal self-consciousness."

An indeterminist view of human consciousness demands that a conscious being is not fully indebted to, or determined by, its programming. For example,

"This summer a Google engineer claimed that the company’s newly improved chatbot, called LaMDA, was conscious and deserved to be treated like a small child. This claim was met with skepticism, mainly because, as Dr. Lipson noted, the chatbot was processing “a code that is written to complete a task.” There was no underlying structure of consciousness, other researchers said, only the illusion of consciousness. Dr. Lipson added: “The robot was not self aware. It’s a bit like cheating.”"

***

For a brilliant philosophical take on consciousness, see J. P. Moreland, Consciousness and the Existence of God.

And, google "the hard problem of consciousness."